Thiago Hersan committed

Commit • 988ebda • 1 Parent(s): 15a1214

adds app and dockerfile files

- .gitignore +2 -0

- Dockerfile +12 -0

- README.md +1 -1

- app.py +100 -0

- examples/map-000.jpg +0 -0

- examples/map-010.jpg +0 -0

- examples/map-018.jpg +0 -0

- examples/map-114.jpg +0 -0

- requirements.txt +6 -0

.gitignore

ADDED

@@ -0,0 +1,2 @@
+.DS_Store
+

Dockerfile

ADDED

@@ -0,0 +1,12 @@
+FROM python:3.8.15
+
+WORKDIR /code
+
+COPY ./requirements.txt /code/requirements.txt
+
+RUN pip install --no-cache-dir --upgrade -r /code/requirements.txt
+
+COPY app.py /code/app.py
+COPY examples /code/examples
+
+CMD ["python", "app.py"]
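
The `CMD` in this Dockerfile runs `app.py` directly, so the address and port the server binds to are decided by the `demo.launch()` call in that file. A containerized server usually has to listen on all interfaces to be reachable from outside the container, and Docker Spaces route traffic to port 7860 unless `app_port` is set in the README metadata. Gradio's `launch()` accepts `server_name` and `server_port`, and Gradio also reads the `GRADIO_SERVER_NAME` and `GRADIO_SERVER_PORT` environment variables. The sketch below shows one way to make those settings explicit; `launch_kwargs` is a hypothetical helper, not part of this commit.

```python
import os


def launch_kwargs(env=None):
    """Build keyword arguments for demo.launch() in a container.

    Hypothetical helper: falls back to container-friendly defaults
    when the Gradio environment variables are not set.
    """
    env = os.environ if env is None else env
    return {
        # Listen on all interfaces so the port is reachable from outside.
        "server_name": env.get("GRADIO_SERVER_NAME", "0.0.0.0"),
        # 7860 is the default port that Docker Spaces expose.
        "server_port": int(env.get("GRADIO_SERVER_PORT", "7860")),
        # Same flag the commit already passes to launch().
        "show_api": False,
    }
```

With this helper, the last line of `app.py` could read `demo.launch(**launch_kwargs())`. Setting `ENV GRADIO_SERVER_NAME=0.0.0.0` in the Dockerfile is an alternative that leaves `app.py` unchanged.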

README.md

CHANGED

@@ -1,6 +1,6 @@
 ---
 title: Maskformer Satellite Trees Gradio Docker
-emoji:
+emoji: 🛰🐳
 colorFrom: pink
 colorTo: green
 sdk: docker

app.py

ADDED

@@ -0,0 +1,100 @@
+import glob
+import gradio as gr
+import numpy as np
+from os import environ
+from PIL import Image
+from torchvision import transforms as T
+from transformers import MaskFormerForInstanceSegmentation, MaskFormerImageProcessor
+
+
+example_images = sorted(glob.glob('examples/map*.jpg'))
+
+ade_mean=[0.485, 0.456, 0.406]
+ade_std=[0.229, 0.224, 0.225]
+
+test_transform = T.Compose([
+    T.ToTensor(),
+    T.Normalize(mean=ade_mean, std=ade_std)
+])
+
+palette = [
+    [120, 120, 120], [4, 200, 4], [4, 4, 250], [6, 230, 230],
+    [80, 50, 50], [120, 120, 80], [140, 140, 140], [204, 5, 255]
+]
+
+model_id = f"thiagohersan/maskformer-satellite-trees"
+vegetation_labels = ["vegetation"]
+
+# preprocessor = MaskFormerImageProcessor.from_pretrained(model_id)
+preprocessor = MaskFormerImageProcessor(
+    do_resize=False,
+    do_normalize=False,
+    do_rescale=False,
+    ignore_index=255,
+    reduce_labels=False
+)
+
+hf_token = environ.get('HFTOKEN')
+model = MaskFormerForInstanceSegmentation.from_pretrained(model_id, use_auth_token=hf_token)
+
+

+

def visualize_instance_seg_mask(img_in, mask, id2label, included_labels):

|

| 42 |

+

img_out = np.zeros((mask.shape[0], mask.shape[1], 3))

|

| 43 |

+

image_total_pixels = mask.shape[0] * mask.shape[1]

|

| 44 |

+

label_ids = np.unique(mask)

|

| 45 |

+

|

| 46 |

+

id2color = {id: palette[id] for id in label_ids}

|

| 47 |

+

id2count = {id: 0 for id in label_ids}

|

| 48 |

+

|

| 49 |

+

for i in range(img_out.shape[0]):

|

| 50 |

+

for j in range(img_out.shape[1]):

|

| 51 |

+

img_out[i, j, :] = id2color[mask[i, j]]

|

| 52 |

+

id2count[mask[i, j]] = id2count[mask[i, j]] + 1

|

| 53 |

+

|

| 54 |

+

image_res = (0.5 * img_in + 0.5 * img_out).astype(np.uint8)

|

| 55 |

+

|

| 56 |

+

dataframe = [[

|

| 57 |

+

f"{id2label[id]}",

|

| 58 |

+

f"{(100 * id2count[id] / image_total_pixels):.2f} %",

|

| 59 |

+

f"{np.sqrt(id2count[id] / image_total_pixels):.2f} m"

|

| 60 |

+

] for id in label_ids if id2label[id] in included_labels]

|

| 61 |

+

|

| 62 |

+

if len(dataframe) < 1:

|

| 63 |

+

dataframe = [[

|

| 64 |

+

f"",

|

| 65 |

+

f"{(0):.2f} %",

|

| 66 |

+

f"{(0):.2f} m"

|

| 67 |

+

]]

|

| 68 |

+

|

| 69 |

+

return image_res, dataframe

|
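
Review note on `visualize_instance_seg_mask`: the nested loop colors and counts one pixel at a time in Python, which can be slow for large satellite tiles. The sketch below shows a vectorized alternative that should give the same colors and counts, using NumPy integer-array indexing and `np.unique(..., return_counts=True)`. The names `PALETTE`, `colorize_and_count`, and `overlay` are hypothetical and not part of the commit, and the sketch assumes `mask` holds integer label ids smaller than the palette length.

```python
import numpy as np

# Same eight colors as the `palette` list in app.py, as a (8, 3) array.
PALETTE = np.array([
    [120, 120, 120], [4, 200, 4], [4, 4, 250], [6, 230, 230],
    [80, 50, 50], [120, 120, 80], [140, 140, 140], [204, 5, 255],
], dtype=np.uint8)


def colorize_and_count(mask, palette=PALETTE):
    """Return an (H, W, 3) color image and a {label_id: pixel_count} dict."""
    mask = np.asarray(mask)
    # Indexing the palette with an (H, W) integer array yields (H, W, 3).
    img_out = palette[mask]
    # One pass over the mask gives every label id and its pixel count.
    ids, counts = np.unique(mask, return_counts=True)
    id2count = {int(i): int(c) for i, c in zip(ids, counts)}
    return img_out, id2count


def overlay(img_in, img_out):
    """Blend input image and color mask 50/50, as the original code does."""
    return (0.5 * img_in + 0.5 * img_out).astype(np.uint8)
```

The rest of the function (the percentage and square-length rows) could stay as it is, reading from the returned `id2count`.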

+
+
+def query_image(image_path):
+    img = np.array(Image.open(image_path))
+    img_size = (img.shape[0], img.shape[1])
+    inputs = preprocessor(images=test_transform(img), return_tensors="pt")
+    outputs = model(**inputs)
+    results = preprocessor.post_process_semantic_segmentation(outputs=outputs, target_sizes=[img_size])[0]
+    mask_img, dataframe = visualize_instance_seg_mask(img, results.numpy(), model.config.id2label, vegetation_labels)
+    return mask_img, dataframe
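
Review note on `query_image`: the image goes through `test_transform` before it reaches the preprocessor, which is presumably why the preprocessor is built with `do_resize`, `do_normalize`, and `do_rescale` all set to `False`. For a `uint8` RGB array, `T.ToTensor()` scales values to `[0, 1]` and moves channels first, and `T.Normalize` then subtracts the per-channel mean and divides by the per-channel standard deviation. The NumPy sketch below spells out that arithmetic. `to_normalized_chw` is a hypothetical name, and the sketch assumes a three-channel `uint8` input; a grayscale or RGBA file would need converting first.

```python
import numpy as np

# Same values as `ade_mean` and `ade_std` in app.py.
ADE_MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
ADE_STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)


def to_normalized_chw(img_hwc_uint8):
    """NumPy equivalent of ToTensor() followed by Normalize(mean, std)."""
    # Scale 0..255 integers to 0..1 floats.
    x = img_hwc_uint8.astype(np.float32) / 255.0
    # Broadcast over the last (channel) axis: (x - mean) / std.
    x = (x - ADE_MEAN) / ADE_STD
    # Height-width-channel to channel-height-width.
    return np.transpose(x, (2, 0, 1))
```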

+
+
+demo = gr.Interface(
+    title="Maskformer Satellite+Trees",
+    description="Using a finetuned version of the [facebook/maskformer-swin-base-ade](https://huggingface.co/facebook/maskformer-swin-base-ade) model (created specifically to work with satellite images) to calculate percentage of pixels in an image that belong to vegetation.",
+
+    fn=query_image,
+    inputs=[gr.Image(type="filepath", label="Input Image")],
+    outputs=[
+        gr.Image(label="Vegetation"),
+        gr.DataFrame(label="Info", headers=["Object Label", "Pixel Percent", "Square Length"])
+    ],
+
+    examples=example_images,
+    cache_examples=True,
+
+    allow_flagging="never",
+    analytics_enabled=None
+)
+
+demo.launch(show_api=False)
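
Review note on the "Pixel Percent" and "Square Length" columns: for each included label, the code reports the fraction of pixels carrying that label as a percentage, and the square root of that fraction. The square root is the side of a square with the same area, expressed as a fraction of the image side. The `m` suffix only reads as metres if the image is taken to span one unit per side, and the commit does not state the scale. The pure-Python sketch below reproduces the arithmetic on a small mask. `coverage_rows` and the example `id2label` mapping are hypothetical; the real label mapping comes from `model.config.id2label`.

```python
import math
from collections import Counter


def coverage_rows(mask, id2label, included_labels):
    """Build [label, percent, square-length] rows from a 2D mask of label ids."""
    counts = Counter(value for row in mask for value in row)
    total = sum(counts.values())
    rows = []
    for label_id in sorted(counts):
        label = id2label[label_id]
        if label in included_labels:
            fraction = counts[label_id] / total
            rows.append([
                label,
                f"{100 * fraction:.2f} %",
                f"{math.sqrt(fraction):.2f} m",
            ])
    # Same fallback row the original code emits when nothing matches.
    return rows or [["", "0.00 %", "0.00 m"]]
```

For a 4×4 mask in which 4 pixels are vegetation, the fraction is 4/16 = 0.25, so the row reads `25.00 %` and `0.50 m`: a square covering a quarter of the image has half its side length.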

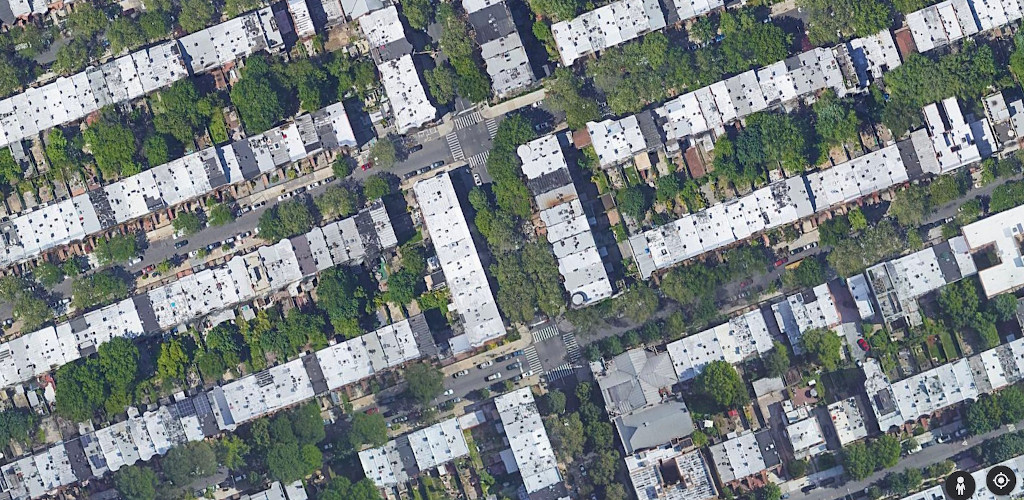

examples/map-000.jpg

ADDED

examples/map-010.jpg

ADDED

examples/map-018.jpg

ADDED

examples/map-114.jpg

ADDED

requirements.txt

ADDED

@@ -0,0 +1,6 @@
+gradio==3.16.2
+Pillow==9.4.0
+scipy==1.9.3
+torch==1.13.1
+torchvision==0.14.1
+transformers==4.25.1