chore: init repo

Author: tangchangli
Commit: 7cf7820
Parent(s): 3415c92

- .gitattributes +1 -0

- .gitignore +163 -0

- LICENSE +201 -0

- README.md +77 -0

- beats/BEATs.py +180 -0

- beats/LICENSE_beats +21 -0

- beats/Tokenizers.py +172 -0

- beats/__init__.py +0 -0

- beats/backbone.py +783 -0

- beats/modules.py +218 -0

- beats/quantizer.py +215 -0

- cli_inference.py +53 -0

- model.py +251 -0

- other_third-party_licenses/LICENSE_vicuna +201 -0

- other_third-party_licenses/LICENSE_whisper +21 -0

- qformer/LICENSE_Lavis +14 -0

- qformer/LICENSE_MiniGPT4 +14 -0

- qformer/LICENSE_VideoLlama +28 -0

- qformer/Qformer.py +1217 -0

- requirements.txt +10 -0

- resource/audio_demo/duck.wav +0 -0

- resource/audio_demo/excitement.wav +0 -0

- resource/audio_demo/gunshots.wav +0 -0

- resource/audio_demo/mountain.wav +0 -0

- resource/audio_demo/music.wav +0 -0

- resource/response_demo/aac.png +0 -0

- resource/response_demo/aed.png +0 -0

- resource/response_demo/asr.png +0 -0

- resource/response_demo/emo.png +0 -0

- resource/response_demo/jsac.png +0 -0

- resource/response_demo/lyrics.png +0 -0

- resource/response_demo/mc.png +0 -0

- resource/response_demo/memo.png +0 -0

- resource/response_demo/pr.png +0 -0

- resource/response_demo/sac.png +0 -0

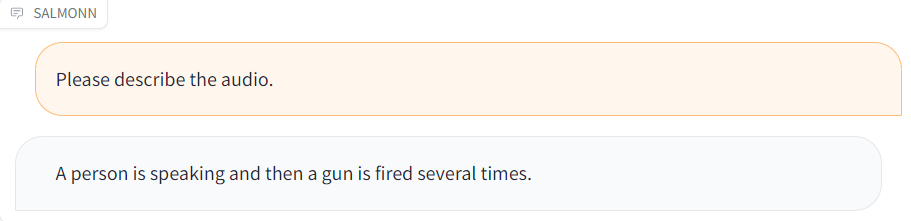

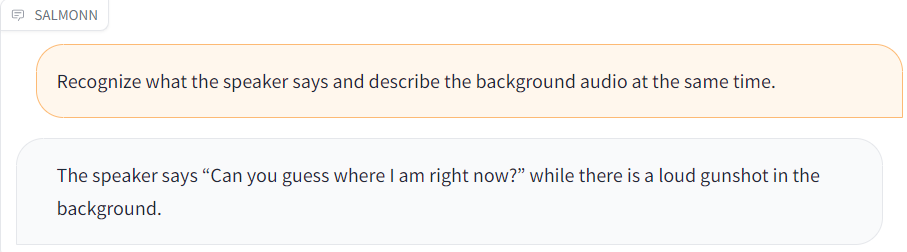

- resource/response_demo/sq.png +0 -0

- resource/response_demo/sr.png +0 -0

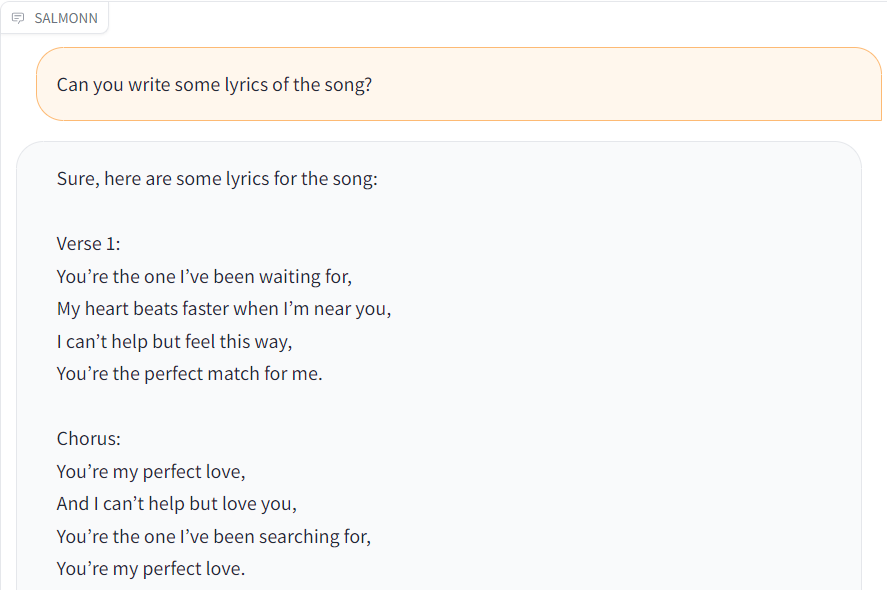

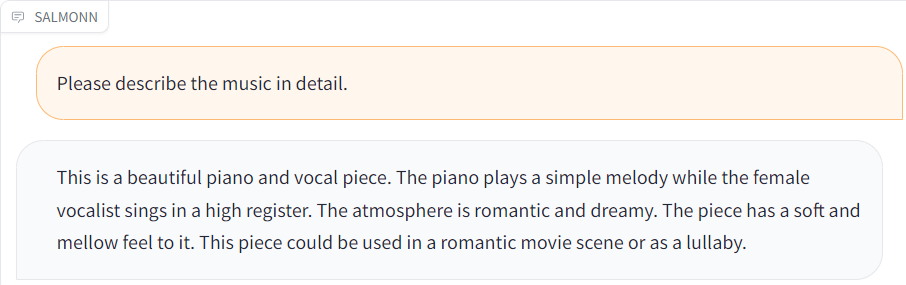

- resource/response_demo/story.png +0 -0

- resource/response_demo/title.png +0 -0

- resource/salmon.png +3 -0

- resource/structure.png +0 -0

- salmonn_7b_v0.pth +3 -0

- web_demo.py +166 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

resource/salmon.png filter=lfs diff=lfs merge=lfs -text

|

.gitignore

ADDED

|

@@ -0,0 +1,163 @@

|

| 1 |

+

# Byte-compiled / optimized / DLL files

|

| 2 |

+

__pycache__/

|

| 3 |

+

*.py[cod]

|

| 4 |

+

*$py.class

|

| 5 |

+

|

| 6 |

+

# C extensions

|

| 7 |

+

*.so

|

| 8 |

+

|

| 9 |

+

# Distribution / packaging

|

| 10 |

+

.Python

|

| 11 |

+

build/

|

| 12 |

+

develop-eggs/

|

| 13 |

+

dist/

|

| 14 |

+

downloads/

|

| 15 |

+

eggs/

|

| 16 |

+

.eggs/

|

| 17 |

+

lib/

|

| 18 |

+

lib64/

|

| 19 |

+

parts/

|

| 20 |

+

sdist/

|

| 21 |

+

var/

|

| 22 |

+

wheels/

|

| 23 |

+

share/python-wheels/

|

| 24 |

+

*.egg-info/

|

| 25 |

+

.installed.cfg

|

| 26 |

+

*.egg

|

| 27 |

+

MANIFEST

|

| 28 |

+

|

| 29 |

+

# PyInstaller

|

| 30 |

+

# Usually these files are written by a python script from a template

|

| 31 |

+

# before PyInstaller builds the exe, so as to inject date/other infos into it.

|

| 32 |

+

*.manifest

|

| 33 |

+

*.spec

|

| 34 |

+

|

| 35 |

+

# Installer logs

|

| 36 |

+

pip-log.txt

|

| 37 |

+

pip-delete-this-directory.txt

|

| 38 |

+

|

| 39 |

+

# Unit test / coverage reports

|

| 40 |

+

htmlcov/

|

| 41 |

+

.tox/

|

| 42 |

+

.nox/

|

| 43 |

+

.coverage

|

| 44 |

+

.coverage.*

|

| 45 |

+

.cache

|

| 46 |

+

nosetests.xml

|

| 47 |

+

coverage.xml

|

| 48 |

+

*.cover

|

| 49 |

+

*.py,cover

|

| 50 |

+

.hypothesis/

|

| 51 |

+

.pytest_cache/

|

| 52 |

+

cover/

|

| 53 |

+

|

| 54 |

+

# Translations

|

| 55 |

+

*.mo

|

| 56 |

+

*.pot

|

| 57 |

+

|

| 58 |

+

# Django stuff:

|

| 59 |

+

*.log

|

| 60 |

+

local_settings.py

|

| 61 |

+

db.sqlite3

|

| 62 |

+

db.sqlite3-journal

|

| 63 |

+

|

| 64 |

+

# Flask stuff:

|

| 65 |

+

instance/

|

| 66 |

+

.webassets-cache

|

| 67 |

+

|

| 68 |

+

# Scrapy stuff:

|

| 69 |

+

.scrapy

|

| 70 |

+

|

| 71 |

+

# Sphinx documentation

|

| 72 |

+

docs/_build/

|

| 73 |

+

|

| 74 |

+

# PyBuilder

|

| 75 |

+

.pybuilder/

|

| 76 |

+

target/

|

| 77 |

+

|

| 78 |

+

# Jupyter Notebook

|

| 79 |

+

.ipynb_checkpoints

|

| 80 |

+

|

| 81 |

+

# IPython

|

| 82 |

+

profile_default/

|

| 83 |

+

ipython_config.py

|

| 84 |

+

|

| 85 |

+

# pyenv

|

| 86 |

+

# For a library or package, you might want to ignore these files since the code is

|

| 87 |

+

# intended to run in multiple environments; otherwise, check them in:

|

| 88 |

+

# .python-version

|

| 89 |

+

|

| 90 |

+

# pipenv

|

| 91 |

+

# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

|

| 92 |

+

# However, in case of collaboration, if having platform-specific dependencies or dependencies

|

| 93 |

+

# having no cross-platform support, pipenv may install dependencies that don't work, or not

|

| 94 |

+

# install all needed dependencies.

|

| 95 |

+

#Pipfile.lock

|

| 96 |

+

|

| 97 |

+

# poetry

|

| 98 |

+

# Similar to Pipfile.lock, it is generally recommended to include poetry.lock in version control.

|

| 99 |

+

# This is especially recommended for binary packages to ensure reproducibility, and is more

|

| 100 |

+

# commonly ignored for libraries.

|

| 101 |

+

# https://python-poetry.org/docs/basic-usage/#commit-your-poetrylock-file-to-version-control

|

| 102 |

+

#poetry.lock

|

| 103 |

+

|

| 104 |

+

# pdm

|

| 105 |

+

# Similar to Pipfile.lock, it is generally recommended to include pdm.lock in version control.

|

| 106 |

+

#pdm.lock

|

| 107 |

+

# pdm stores project-wide configurations in .pdm.toml, but it is recommended to not include it

|

| 108 |

+

# in version control.

|

| 109 |

+

# https://pdm.fming.dev/#use-with-ide

|

| 110 |

+

.pdm.toml

|

| 111 |

+

|

| 112 |

+

# PEP 582; used by e.g. github.com/David-OConnor/pyflow and github.com/pdm-project/pdm

|

| 113 |

+

__pypackages__/

|

| 114 |

+

|

| 115 |

+

# Celery stuff

|

| 116 |

+

celerybeat-schedule

|

| 117 |

+

celerybeat.pid

|

| 118 |

+

|

| 119 |

+

# SageMath parsed files

|

| 120 |

+

*.sage.py

|

| 121 |

+

|

| 122 |

+

# Environments

|

| 123 |

+

.env

|

| 124 |

+

.venv

|

| 125 |

+

env/

|

| 126 |

+

venv/

|

| 127 |

+

ENV/

|

| 128 |

+

env.bak/

|

| 129 |

+

venv.bak/

|

| 130 |

+

|

| 131 |

+

# Spyder project settings

|

| 132 |

+

.spyderproject

|

| 133 |

+

.spyproject

|

| 134 |

+

|

| 135 |

+

# Rope project settings

|

| 136 |

+

.ropeproject

|

| 137 |

+

|

| 138 |

+

# mkdocs documentation

|

| 139 |

+

/site

|

| 140 |

+

|

| 141 |

+

# mypy

|

| 142 |

+

.mypy_cache/

|

| 143 |

+

.dmypy.json

|

| 144 |

+

dmypy.json

|

| 145 |

+

|

| 146 |

+

# Pyre type checker

|

| 147 |

+

.pyre/

|

| 148 |

+

|

| 149 |

+

# pytype static type analyzer

|

| 150 |

+

.pytype/

|

| 151 |

+

|

| 152 |

+

# Cython debug symbols

|

| 153 |

+

cython_debug/

|

| 154 |

+

|

| 155 |

+

# PyCharm

|

| 156 |

+

# JetBrains specific template is maintained in a separate JetBrains.gitignore that can

|

| 157 |

+

# be found at https://github.com/github/gitignore/blob/main/Global/JetBrains.gitignore

|

| 158 |

+

# and can be added to the global gitignore or merged into this file. For a more nuclear

|

| 159 |

+

# option (not recommended) you can uncomment the following to ignore the entire idea folder.

|

| 160 |

+

#.idea/

|

| 161 |

+

|

| 162 |

+

**/.DS_Store

|

| 163 |

+

launch.sh

|

LICENSE

ADDED

|

@@ -0,0 +1,201 @@

|

| 1 |

+

Apache License

|

| 2 |

+

Version 2.0, January 2004

|

| 3 |

+

http://www.apache.org/licenses/

|

| 4 |

+

|

| 5 |

+

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

|

| 6 |

+

|

| 7 |

+

1. Definitions.

|

| 8 |

+

|

| 9 |

+

"License" shall mean the terms and conditions for use, reproduction,

|

| 10 |

+

and distribution as defined by Sections 1 through 9 of this document.

|

| 11 |

+

|

| 12 |

+

"Licensor" shall mean the copyright owner or entity authorized by

|

| 13 |

+

the copyright owner that is granting the License.

|

| 14 |

+

|

| 15 |

+

"Legal Entity" shall mean the union of the acting entity and all

|

| 16 |

+

other entities that control, are controlled by, or are under common

|

| 17 |

+

control with that entity. For the purposes of this definition,

|

| 18 |

+

"control" means (i) the power, direct or indirect, to cause the

|

| 19 |

+

direction or management of such entity, whether by contract or

|

| 20 |

+

otherwise, or (ii) ownership of fifty percent (50%) or more of the

|

| 21 |

+

outstanding shares, or (iii) beneficial ownership of such entity.

|

| 22 |

+

|

| 23 |

+

"You" (or "Your") shall mean an individual or Legal Entity

|

| 24 |

+

exercising permissions granted by this License.

|

| 25 |

+

|

| 26 |

+

"Source" form shall mean the preferred form for making modifications,

|

| 27 |

+

including but not limited to software source code, documentation

|

| 28 |

+

source, and configuration files.

|

| 29 |

+

|

| 30 |

+

"Object" form shall mean any form resulting from mechanical

|

| 31 |

+

transformation or translation of a Source form, including but

|

| 32 |

+

not limited to compiled object code, generated documentation,

|

| 33 |

+

and conversions to other media types.

|

| 34 |

+

|

| 35 |

+

"Work" shall mean the work of authorship, whether in Source or

|

| 36 |

+

Object form, made available under the License, as indicated by a

|

| 37 |

+

copyright notice that is included in or attached to the work

|

| 38 |

+

(an example is provided in the Appendix below).

|

| 39 |

+

|

| 40 |

+

"Derivative Works" shall mean any work, whether in Source or Object

|

| 41 |

+

form, that is based on (or derived from) the Work and for which the

|

| 42 |

+

editorial revisions, annotations, elaborations, or other modifications

|

| 43 |

+

represent, as a whole, an original work of authorship. For the purposes

|

| 44 |

+

of this License, Derivative Works shall not include works that remain

|

| 45 |

+

separable from, or merely link (or bind by name) to the interfaces of,

|

| 46 |

+

the Work and Derivative Works thereof.

|

| 47 |

+

|

| 48 |

+

"Contribution" shall mean any work of authorship, including

|

| 49 |

+

the original version of the Work and any modifications or additions

|

| 50 |

+

to that Work or Derivative Works thereof, that is intentionally

|

| 51 |

+

submitted to Licensor for inclusion in the Work by the copyright owner

|

| 52 |

+

or by an individual or Legal Entity authorized to submit on behalf of

|

| 53 |

+

the copyright owner. For the purposes of this definition, "submitted"

|

| 54 |

+

means any form of electronic, verbal, or written communication sent

|

| 55 |

+

to the Licensor or its representatives, including but not limited to

|

| 56 |

+

communication on electronic mailing lists, source code control systems,

|

| 57 |

+

and issue tracking systems that are managed by, or on behalf of, the

|

| 58 |

+

Licensor for the purpose of discussing and improving the Work, but

|

| 59 |

+

excluding communication that is conspicuously marked or otherwise

|

| 60 |

+

designated in writing by the copyright owner as "Not a Contribution."

|

| 61 |

+

|

| 62 |

+

"Contributor" shall mean Licensor and any individual or Legal Entity

|

| 63 |

+

on behalf of whom a Contribution has been received by Licensor and

|

| 64 |

+

subsequently incorporated within the Work.

|

| 65 |

+

|

| 66 |

+

2. Grant of Copyright License. Subject to the terms and conditions of

|

| 67 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 68 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 69 |

+

copyright license to reproduce, prepare Derivative Works of,

|

| 70 |

+

publicly display, publicly perform, sublicense, and distribute the

|

| 71 |

+

Work and such Derivative Works in Source or Object form.

|

| 72 |

+

|

| 73 |

+

3. Grant of Patent License. Subject to the terms and conditions of

|

| 74 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 75 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 76 |

+

(except as stated in this section) patent license to make, have made,

|

| 77 |

+

use, offer to sell, sell, import, and otherwise transfer the Work,

|

| 78 |

+

where such license applies only to those patent claims licensable

|

| 79 |

+

by such Contributor that are necessarily infringed by their

|

| 80 |

+

Contribution(s) alone or by combination of their Contribution(s)

|

| 81 |

+

with the Work to which such Contribution(s) was submitted. If You

|

| 82 |

+

institute patent litigation against any entity (including a

|

| 83 |

+

cross-claim or counterclaim in a lawsuit) alleging that the Work

|

| 84 |

+

or a Contribution incorporated within the Work constitutes direct

|

| 85 |

+

or contributory patent infringement, then any patent licenses

|

| 86 |

+

granted to You under this License for that Work shall terminate

|

| 87 |

+

as of the date such litigation is filed.

|

| 88 |

+

|

| 89 |

+

4. Redistribution. You may reproduce and distribute copies of the

|

| 90 |

+

Work or Derivative Works thereof in any medium, with or without

|

| 91 |

+

modifications, and in Source or Object form, provided that You

|

| 92 |

+

meet the following conditions:

|

| 93 |

+

|

| 94 |

+

(a) You must give any other recipients of the Work or

|

| 95 |

+

Derivative Works a copy of this License; and

|

| 96 |

+

|

| 97 |

+

(b) You must cause any modified files to carry prominent notices

|

| 98 |

+

stating that You changed the files; and

|

| 99 |

+

|

| 100 |

+

(c) You must retain, in the Source form of any Derivative Works

|

| 101 |

+

that You distribute, all copyright, patent, trademark, and

|

| 102 |

+

attribution notices from the Source form of the Work,

|

| 103 |

+

excluding those notices that do not pertain to any part of

|

| 104 |

+

the Derivative Works; and

|

| 105 |

+

|

| 106 |

+

(d) If the Work includes a "NOTICE" text file as part of its

|

| 107 |

+

distribution, then any Derivative Works that You distribute must

|

| 108 |

+

include a readable copy of the attribution notices contained

|

| 109 |

+

within such NOTICE file, excluding those notices that do not

|

| 110 |

+

pertain to any part of the Derivative Works, in at least one

|

| 111 |

+

of the following places: within a NOTICE text file distributed

|

| 112 |

+

as part of the Derivative Works; within the Source form or

|

| 113 |

+

documentation, if provided along with the Derivative Works; or,

|

| 114 |

+

within a display generated by the Derivative Works, if and

|

| 115 |

+

wherever such third-party notices normally appear. The contents

|

| 116 |

+

of the NOTICE file are for informational purposes only and

|

| 117 |

+

do not modify the License. You may add Your own attribution

|

| 118 |

+

notices within Derivative Works that You distribute, alongside

|

| 119 |

+

or as an addendum to the NOTICE text from the Work, provided

|

| 120 |

+

that such additional attribution notices cannot be construed

|

| 121 |

+

as modifying the License.

|

| 122 |

+

|

| 123 |

+

You may add Your own copyright statement to Your modifications and

|

| 124 |

+

may provide additional or different license terms and conditions

|

| 125 |

+

for use, reproduction, or distribution of Your modifications, or

|

| 126 |

+

for any such Derivative Works as a whole, provided Your use,

|

| 127 |

+

reproduction, and distribution of the Work otherwise complies with

|

| 128 |

+

the conditions stated in this License.

|

| 129 |

+

|

| 130 |

+

5. Submission of Contributions. Unless You explicitly state otherwise,

|

| 131 |

+

any Contribution intentionally submitted for inclusion in the Work

|

| 132 |

+

by You to the Licensor shall be under the terms and conditions of

|

| 133 |

+

this License, without any additional terms or conditions.

|

| 134 |

+

Notwithstanding the above, nothing herein shall supersede or modify

|

| 135 |

+

the terms of any separate license agreement you may have executed

|

| 136 |

+

with Licensor regarding such Contributions.

|

| 137 |

+

|

| 138 |

+

6. Trademarks. This License does not grant permission to use the trade

|

| 139 |

+

names, trademarks, service marks, or product names of the Licensor,

|

| 140 |

+

except as required for reasonable and customary use in describing the

|

| 141 |

+

origin of the Work and reproducing the content of the NOTICE file.

|

| 142 |

+

|

| 143 |

+

7. Disclaimer of Warranty. Unless required by applicable law or

|

| 144 |

+

agreed to in writing, Licensor provides the Work (and each

|

| 145 |

+

Contributor provides its Contributions) on an "AS IS" BASIS,

|

| 146 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

|

| 147 |

+

implied, including, without limitation, any warranties or conditions

|

| 148 |

+

of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

|

| 149 |

+

PARTICULAR PURPOSE. You are solely responsible for determining the

|

| 150 |

+

appropriateness of using or redistributing the Work and assume any

|

| 151 |

+

risks associated with Your exercise of permissions under this License.

|

| 152 |

+

|

| 153 |

+

8. Limitation of Liability. In no event and under no legal theory,

|

| 154 |

+

whether in tort (including negligence), contract, or otherwise,

|

| 155 |

+

unless required by applicable law (such as deliberate and grossly

|

| 156 |

+

negligent acts) or agreed to in writing, shall any Contributor be

|

| 157 |

+

liable to You for damages, including any direct, indirect, special,

|

| 158 |

+

incidental, or consequential damages of any character arising as a

|

| 159 |

+

result of this License or out of the use or inability to use the

|

| 160 |

+

Work (including but not limited to damages for loss of goodwill,

|

| 161 |

+

work stoppage, computer failure or malfunction, or any and all

|

| 162 |

+

other commercial damages or losses), even if such Contributor

|

| 163 |

+

has been advised of the possibility of such damages.

|

| 164 |

+

|

| 165 |

+

9. Accepting Warranty or Additional Liability. While redistributing

|

| 166 |

+

the Work or Derivative Works thereof, You may choose to offer,

|

| 167 |

+

and charge a fee for, acceptance of support, warranty, indemnity,

|

| 168 |

+

or other liability obligations and/or rights consistent with this

|

| 169 |

+

License. However, in accepting such obligations, You may act only

|

| 170 |

+

on Your own behalf and on Your sole responsibility, not on behalf

|

| 171 |

+

of any other Contributor, and only if You agree to indemnify,

|

| 172 |

+

defend, and hold each Contributor harmless for any liability

|

| 173 |

+

incurred by, or claims asserted against, such Contributor by reason

|

| 174 |

+

of your accepting any such warranty or additional liability.

|

| 175 |

+

|

| 176 |

+

END OF TERMS AND CONDITIONS

|

| 177 |

+

|

| 178 |

+

APPENDIX: How to apply the Apache License to your work.

|

| 179 |

+

|

| 180 |

+

To apply the Apache License to your work, attach the following

|

| 181 |

+

boilerplate notice, with the fields enclosed by brackets "[]"

|

| 182 |

+

replaced with your own identifying information. (Don't include

|

| 183 |

+

the brackets!) The text should be enclosed in the appropriate

|

| 184 |

+

comment syntax for the file format. We also recommend that a

|

| 185 |

+

file or class name and description of purpose be included on the

|

| 186 |

+

same "printed page" as the copyright notice for easier

|

| 187 |

+

identification within third-party archives.

|

| 188 |

+

|

| 189 |

+

Copyright Changli Tang

|

| 190 |

+

|

| 191 |

+

Licensed under the Apache License, Version 2.0 (the "License");

|

| 192 |

+

you may not use this file except in compliance with the License.

|

| 193 |

+

You may obtain a copy of the License at

|

| 194 |

+

|

| 195 |

+

http://www.apache.org/licenses/LICENSE-2.0

|

| 196 |

+

|

| 197 |

+

Unless required by applicable law or agreed to in writing, software

|

| 198 |

+

distributed under the License is distributed on an "AS IS" BASIS,

|

| 199 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 200 |

+

See the License for the specific language governing permissions and

|

| 201 |

+

limitations under the License.

|

README.md

CHANGED

|

@@ -1,3 +1,80 @@

|

| 1 |

---

|

| 2 |

license: apache-2.0

|

| 3 |

+

tags:

|

| 4 |

+

- audio

|

| 5 |

+

- speech

|

| 6 |

+

- music

|

| 7 |

---

|

| 8 |

+

|

| 9 |

+

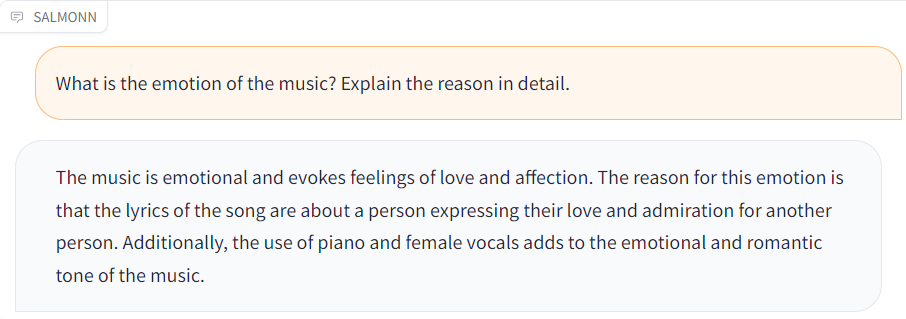

# SALMONN: Speech Audio Language Music Open Neural Network

|

| 10 |

+

|

| 11 |

+

<div align=center><img src="resource/salmon.png" height="256px" width="256px"/></div>

|

| 12 |

+

|

| 13 |

+

🚀🚀 Welcome to the repo of **SALMONN**!

|

| 14 |

+

|

| 15 |

+

SALMONN is a large language model (LLM) that accepts **speech, audio event, and music inputs**, developed by the Department of Electronic Engineering at Tsinghua University and ByteDance. Instead of speech-only or audio-event-only input, SALMONN can perceive and understand all kinds of audio inputs and thereby gains emergent capabilities such as multilingual speech recognition & translation and audio-speech co-reasoning. This can be regarded as giving the LLM "ears" and cognitive hearing abilities, which makes SALMONN a step towards hearing-enabled artificial general intelligence.

|

| 16 |

+

|

| 17 |

+

<div style='display:flex; gap: 0.25rem; '>

|

| 18 |

+

<a href='https://bytedance.github.io/SALMONN/'><img src='https://img.shields.io/badge/SALMONN_13B-Demo-blue'></a>

|

| 19 |

+

<a href='https://huggingface.co/spaces/tsinghua-ee/SALMONN-7B-gradio'><img src='https://img.shields.io/badge/SALMONN_7B-Demo-orange'></a>

|

| 20 |

+

<a href='https://arxiv.org/pdf/2310.13289.pdf'><img src='https://img.shields.io/badge/paper-PDF-green'></a>

|

| 21 |

+

<a href='https://huggingface.co/tsinghua-ee/SALMONN'><img src='https://img.shields.io/badge/huggingface-checkpoint-yellow'></a>

|

| 22 |

+

</div>

|

| 23 |

+

|

| 24 |

+

|

| 25 |

+

## News

|

| 26 |

+

|

| 27 |

+

- [10-08] ✨ We have released [**the model checkpoint**](https://huggingface.co/tsinghua-ee/SALMONN) and **the inference code** for SALMONN-13B!

|

| 28 |

+

- [11-13] 🎁 We have released a **7B version of SALMONN** at [tsinghua-ee/SALMONN-7B](https://huggingface.co/tsinghua-ee/SALMONN-7B) and built the 7B demo [here](https://huggingface.co/spaces/tsinghua-ee/SALMONN-7B-gradio)!

|

| 29 |

+

|

| 30 |

+

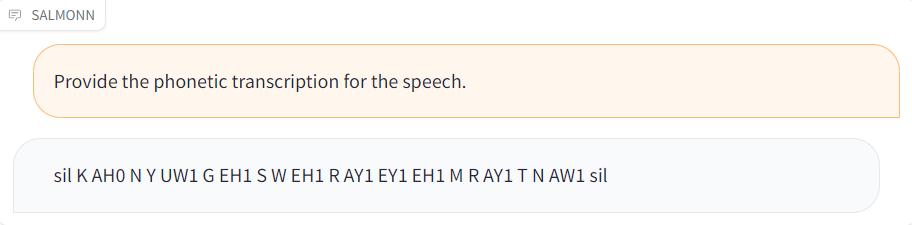

## Structure

|

| 31 |

+

|

| 32 |

+

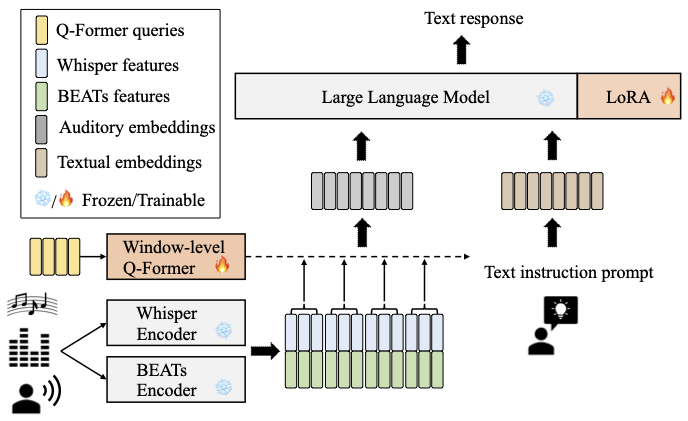

The model architecture of SALMONN is shown below. A window-level Q-Former is used as the connection module to fuse the outputs from a Whisper speech encoder and a BEATs audio encoder as augmented audio tokens, which are aligned with the LLM input space. The LoRA adaptor aligns the augmented LLM input space with its output space. The text prompt is used to instruct SALMONN to answer open-ended questions about the general audio inputs and the answers are in the LLM text responses.
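As a rough, shape-level illustration of what "window-level" fusion could look like, the sketch below concatenates two frame-aligned feature sequences and splits the result into fixed-size windows. It is not the SALMONN implementation: the assumption that the two encoder outputs are frame-aligned and joined along the feature axis, the helper names `concat_frames` and `split_into_windows`, and the window size are all hypothetical, and the Q-Former itself is not shown.

```python
def concat_frames(speech_feats, audio_feats):
    """Concatenate two frame-aligned feature sequences along the feature axis.

    Assumption for illustration: both encoders yield one vector per frame
    and the sequences have the same number of frames.
    """
    if len(speech_feats) != len(audio_feats):
        raise ValueError("sequences must have the same number of frames")
    return [s + a for s, a in zip(speech_feats, audio_feats)]


def split_into_windows(frames, window_size):
    """Split frames into consecutive windows, zero-padding the last window."""
    if window_size <= 0:
        raise ValueError("window_size must be positive")
    if not frames:
        return []
    dim = len(frames[0])
    windows = []
    for start in range(0, len(frames), window_size):
        window = list(frames[start:start + window_size])
        pad = window_size - len(window)
        window.extend([0.0] * dim for _ in range(pad))
        windows.append(window)
    return windows


if __name__ == "__main__":
    # Toy features: 5 frames, 2-dim "speech" and 3-dim "audio" vectors.
    speech = [[1.0, 2.0] for _ in range(5)]
    audio = [[0.1, 0.2, 0.3] for _ in range(5)]
    fused = concat_frames(speech, audio)
    windows = split_into_windows(fused, window_size=2)
    print(len(windows), "windows of", len(windows[0]), "frames each")
```

In such a scheme each window would be handed to the connection module separately, so the number of audio tokens grows with the audio length instead of being fixed.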

|

| 33 |

+

|

| 34 |

+

<div align=center><img src="resource/structure.png" height="100%" width="75%"/></div>

|

| 35 |

+

|

| 36 |

+

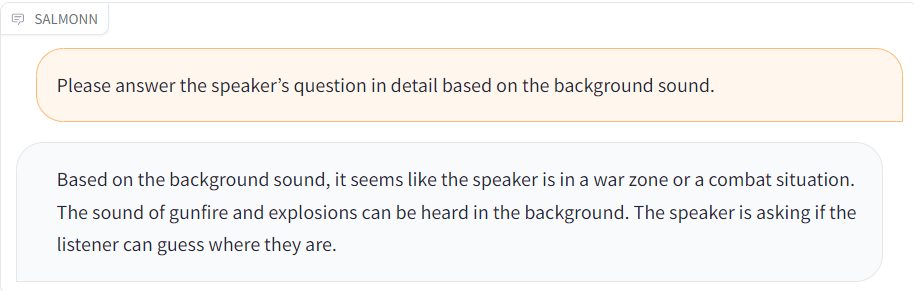

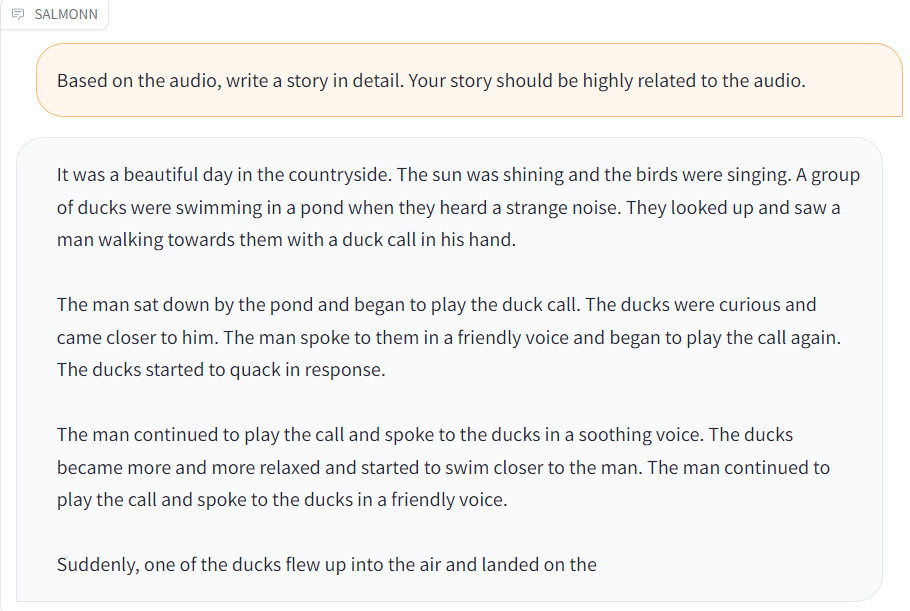

## Demos

|

| 37 |

+

|

| 38 |

+

Compared with traditional speech and audio processing tasks such as speech recognition and audio captioning, SALMONN leverages the general knowledge and cognitive abilities of the LLM to achieve cognitively oriented audio perception, which dramatically improves the versatility of the model and the richness of the tasks it can handle. In addition, SALMONN is able to follow textual commands, and even spoken commands, with a relatively high degree of accuracy. Since SALMONN is trained only on data with textual commands, following spoken commands is also a cross-modal emergent ability.

|

| 39 |

+

|

| 40 |

+

Here are some examples of SALMONN.

|

| 41 |

+

|

| 42 |

+

| Audio | Response |

|

| 43 |

+

| ------------------------------------------------------ | -------------------------------------------- |

|

| 44 |

+

| [gunshots.wav](./resource/audio_demo/gunshots.wav) |  |

|

| 45 |

+

| [duck.wav](./resource/audio_demo/duck.wav) |  |

|

| 46 |

+

| [music.wav](./resource/audio_demo/music.wav) |  |

|

| 47 |

+

|

| 48 |

+

|

| 49 |

+

## How to inference in CLI

|

| 50 |

+

|

| 51 |

+

For SALMONN-7B v0, you need to use the following dependencies:

|

| 52 |

+

|

| 53 |

+

1. Our environment: the Python version is 3.9.17, and the other required packages can be installed with the following command: ```pip install -r requirements.txt```.

|

| 54 |

+

2. Download [whisper large v2](https://huggingface.co/openai/whisper-large-v2/tree/main) to ```whisper_path```.

|

| 55 |

+

3. Download [Fine-tuned BEATs_iter3+ (AS2M) (cpt2)](https://valle.blob.core.windows.net/share/BEATs/BEATs_iter3_plus_AS2M_finetuned_on_AS2M_cpt2.pt?sv=2020-08-04&st=2023-03-01T07%3A51%3A05Z&se=2033-03-02T07%3A51%3A00Z&sr=c&sp=rl&sig=QJXmSJG9DbMKf48UDIU1MfzIro8HQOf3sqlNXiflY1I%3D) to `beats_path`.

|

| 56 |

+

4. Download [vicuna 7B v1.5](https://huggingface.co/lmsys/vicuna-7b-v1.5/tree/main) to ```vicuna_path```.

|

| 57 |

+

5. Download [salmonn-7b v0](https://huggingface.co/tsinghua-ee/SALMONN-7B/blob/main/salmonn_7b_v0.pth) to ```ckpt_path```.

|

| 58 |

+

6. Run ```python3 cli_inference.py --ckpt_path xxx --whisper_path xxx --beats_path xxx --vicuna_path xxx``` to start CLI inference. Please make sure your GPU has more than 40 GB of memory. If your GPU does not have enough memory (e.g. only 24 GB), you can quantize the model with the `--low_resource` parameter to reduce memory usage, and reduce the LoRA scaling factor to maintain the model's emergent abilities, e.g. `--lora_alpha=28`.
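The steps above can be wrapped in a small helper that assembles the command line and checks that the downloaded files are in place before launching. This is only a sketch: the helper names `build_cli_command` and `missing_paths` and the placeholder paths are hypothetical, only the flags named in the README are used, and treating `--low_resource` as a bare switch is an assumption about how the script parses it.

```python
import shlex
from pathlib import Path


def build_cli_command(ckpt_path, whisper_path, beats_path, vicuna_path,
                      low_resource=False, lora_alpha=None,
                      script="cli_inference.py"):
    """Assemble the argument list for the inference script.

    Only flags mentioned in the README are emitted. Whether
    --low_resource is a bare switch is an assumption.
    """
    cmd = [
        "python3", script,
        "--ckpt_path", str(ckpt_path),
        "--whisper_path", str(whisper_path),
        "--beats_path", str(beats_path),
        "--vicuna_path", str(vicuna_path),
    ]
    if low_resource:
        cmd.append("--low_resource")
    if lora_alpha is not None:
        cmd.append(f"--lora_alpha={lora_alpha}")
    return cmd


def missing_paths(*paths):
    """Return the given paths that do not exist on disk."""
    return [str(p) for p in paths if not Path(p).exists()]


if __name__ == "__main__":
    # Placeholder locations; replace with wherever the files were downloaded.
    paths = {
        "ckpt_path": "placeholder/salmonn_7b_v0.pth",
        "whisper_path": "placeholder/whisper-large-v2",
        "beats_path": "placeholder/beats.pt",
        "vicuna_path": "placeholder/vicuna-7b-v1.5",
    }
    missing = missing_paths(*paths.values())
    if missing:
        print("Not found yet:", ", ".join(missing))
    # Low-memory variant from step 6: quantize and lower the LoRA scaling.
    cmd = build_cli_command(**paths, low_resource=True, lora_alpha=28)
    print(shlex.join(cmd))
```

The printed line can be pasted into a shell, or the list can be passed to `subprocess.run` once all four paths exist.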

|

| 59 |

+

|

| 60 |

+

## How to launch a web demo

|

| 61 |

+

|

| 62 |

+

1. Same as **How to inference in CLI: 1-5**.

|

| 63 |

+

2. Run ```python3 web_demo.py --ckpt_path xxx --whisper_path xxx --beats_path xxx --vicuna_path xxx``` on an A100-SXM-80GB. You can add the `--low_resource` parameter if GPU memory is insufficient, and reduce the LoRA scaling factor to maintain the model's emergent abilities.

|

| 64 |

+

|

| 65 |

+

## Team

|

| 66 |

+

|

| 67 |

+

**Team Tsinghua**: Wenyi Yu, Changli Tang, Guangzhi Sun, Chao Zhang

|

| 68 |

+

|

| 69 |

+

**Team ByteDance**: Xianzhao Chen, Wei Li, Tian Tan, Lu Lu, Zejun Ma

|

| 70 |

+

|

| 71 |

+

## Citation

|

| 72 |

+

If you find SALMONN great and useful, please cite our paper:

|

| 73 |

+

```

|

| 74 |

+

@article{tang2023salmonn,

|

| 75 |

+

title={{SALMONN}: Towards Generic Hearing Abilities for Large Language Models},

|

| 76 |

+

author={Changli, Tang and Wenyi, Yu and Guangzhi, Sun and Xianzhao, Chen and Tian, Tan and Wei, Li and Lu, Lu and Zejun, Ma and Chao, Zhang},

|

| 77 |

+

journal={arXiv:2310.13289},

|

| 78 |

+

year={2023}

|

| 79 |

+

}

|

| 80 |

+

```

|

beats/BEATs.py

ADDED

|

@@ -0,0 +1,180 @@

|

# --------------------------------------------------------
# BEATs: Audio Pre-Training with Acoustic Tokenizers (https://arxiv.org/abs/2212.09058)
# Github source: https://github.com/microsoft/unilm/tree/master/beats
# Copyright (c) 2022 Microsoft
# Licensed under The MIT License [see LICENSE for details]
# Based on fairseq code bases
# https://github.com/pytorch/fairseq
# --------------------------------------------------------


import torch
import torch.nn as nn
from torch.nn import LayerNorm
import torchaudio.compliance.kaldi as ta_kaldi

from beats.backbone import (
    TransformerEncoder,
)

import logging
from typing import Optional

logger = logging.getLogger(__name__)


class BEATsConfig:
    def __init__(self, cfg=None):
        self.input_patch_size: int = -1  # patch size of patch embedding
        self.embed_dim: int = 512  # patch embedding dimension
        self.conv_bias: bool = False  # include bias in conv encoder

        self.encoder_layers: int = 12  # num encoder layers in the transformer
        self.encoder_embed_dim: int = 768  # encoder embedding dimension
        self.encoder_ffn_embed_dim: int = 3072  # encoder embedding dimension for FFN
        self.encoder_attention_heads: int = 12  # num encoder attention heads
        self.activation_fn: str = "gelu"  # activation function to use

        self.layer_wise_gradient_decay_ratio: float = 1.0  # ratio for layer-wise gradient decay
        self.layer_norm_first: bool = False  # apply layernorm first in the transformer
        self.deep_norm: bool = False  # apply deep_norm first in the transformer

        # dropouts
        self.dropout: float = 0.1  # dropout probability for the transformer
        self.attention_dropout: float = 0.1  # dropout probability for attention weights
        self.activation_dropout: float = 0.0  # dropout probability after activation in FFN
        self.encoder_layerdrop: float = 0.0  # probability of dropping a transformer layer
        self.dropout_input: float = 0.0  # dropout to apply to the input (after feat extr)

        # positional embeddings
        self.conv_pos: int = 128  # number of filters for convolutional positional embeddings
        self.conv_pos_groups: int = 16  # number of groups for convolutional positional embedding

        # relative position embedding
        self.relative_position_embedding: bool = False  # apply relative position embedding
        self.num_buckets: int = 320  # number of buckets for relative position embedding
        self.max_distance: int = 1280  # maximum distance for relative position embedding
        self.gru_rel_pos: bool = False  # apply gated relative position embedding

        # label predictor
        self.finetuned_model: bool = False  # whether the model is a fine-tuned model.
        self.predictor_dropout: float = 0.1  # dropout probability for the predictor
        self.predictor_class: int = 527  # target class number for the predictor

        if cfg is not None:
            self.update(cfg)

    def update(self, cfg: dict):
        self.__dict__.update(cfg)
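`BEATsConfig` sets its defaults first and then overwrites them from a plain dict through `self.__dict__.update(cfg)`. The sketch below reproduces that pattern on a toy class to show two consequences: overrides take precedence over defaults, and keys are not validated. `MiniConfig`, the value `16`, and the key `typo_key` are illustrative assumptions and do not appear in the repository.

```python
class MiniConfig:
    """Toy stand-in for BEATsConfig: defaults first, then dict overrides."""

    def __init__(self, cfg=None):
        self.input_patch_size = -1   # placeholder default, expected to be overridden
        self.embed_dim = 512
        self.finetuned_model = False
        if cfg is not None:
            self.update(cfg)

    def update(self, cfg: dict):
        # No key validation: unknown keys silently become new attributes.
        self.__dict__.update(cfg)


# Values here are made up; a real config dict would come from a checkpoint.
config = MiniConfig({"input_patch_size": 16, "finetuned_model": True, "typo_key": 1})

print(config.input_patch_size)      # overridden by the dict
print(config.embed_dim)             # default kept
print(hasattr(config, "typo_key"))  # misspelled keys are accepted without error
```

Because nothing checks the keys, a misspelled option leaves the default in place without any warning, which is worth keeping in mind when a loaded configuration does not seem to take effect.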



class BEATs(nn.Module):
    def __init__(
        self,
        cfg: BEATsConfig,
    ) -> None:
        super().__init__()
        logger.info(f"BEATs Config: {cfg.__dict__}")

        self.cfg = cfg

        self.embed = cfg.embed_dim
        self.post_extract_proj = (
            nn.Linear(self.embed, cfg.encoder_embed_dim)
            if self.embed != cfg.encoder_embed_dim
            else None
        )

        self.input_patch_size = cfg.input_patch_size
        self.patch_embedding = nn.Conv2d(1, self.embed, kernel_size=self.input_patch_size, stride=self.input_patch_size,
                                         bias=cfg.conv_bias)

        self.dropout_input = nn.Dropout(cfg.dropout_input)

        assert not cfg.deep_norm or not cfg.layer_norm_first
        self.encoder = TransformerEncoder(cfg)
        self.layer_norm = LayerNorm(self.embed)

        if cfg.finetuned_model:
            self.predictor_dropout = nn.Dropout(cfg.predictor_dropout)
            self.predictor = nn.Linear(cfg.encoder_embed_dim, cfg.predictor_class)
        else:
            self.predictor = None
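Because `patch_embedding` is a `Conv2d` whose kernel size equals its stride, it cuts the filterbank image into non-overlapping square patches. A rough way to estimate the resulting sequence length is sketched below. The helper name `num_patches` and the patch size of 16 are assumptions for illustration (the config default of -1 is only a placeholder); the 128 mel bins match the `num_mel_bins=128` argument used in `preprocess`.

```python
def num_patches(num_frames: int, num_mel_bins: int = 128, patch_size: int = 16) -> int:
    """Number of tokens produced by a conv with kernel_size == stride == patch_size.

    With no padding, each axis yields floor(length / patch_size) patches
    (for length >= patch_size); leftover frames or bins are dropped.
    """
    return (num_frames // patch_size) * (num_mel_bins // patch_size)


# 1024 frames x 128 bins -> 64 x 8 patches
print(num_patches(1024))
# 98 frames: only 6 full patches fit along time, the last 2 frames are dropped
print(num_patches(98))
```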


    def forward_padding_mask(
        self,
        features: torch.Tensor,
        padding_mask: torch.Tensor,
    ) -> torch.Tensor:
        extra = padding_mask.size(1) % features.size(1)
        if extra > 0:
            padding_mask = padding_mask[:, :-extra]
        padding_mask = padding_mask.view(
            padding_mask.size(0), features.size(1), -1
        )
        padding_mask = padding_mask.all(-1)
        return padding_mask
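`forward_padding_mask` shrinks a fine-grained padding mask to the length of a coarser feature sequence: it trims the remainder, groups the mask entries per feature step, and marks a step as padding only if every entry in its group is padding. The pure-Python sketch below mirrors that logic for a single sequence so it can be followed without tensors. The function name `downsample_padding_mask` is hypothetical, and it assumes the mask is at least as long as the feature sequence.

```python
from typing import List


def downsample_padding_mask(mask: List[bool], num_steps: int) -> List[bool]:
    """Single-sequence mirror of forward_padding_mask (True means padding)."""
    extra = len(mask) % num_steps
    if extra > 0:
        # Drop trailing entries that do not fill a complete group.
        mask = mask[:-extra]
    group = len(mask) // num_steps
    # A step counts as padding only if all entries in its group are padding.
    return [all(mask[i * group:(i + 1) * group]) for i in range(num_steps)]


# 10 entries, 3 feature steps: one entry is trimmed, then groups of 3 are reduced.
sample_mask = [False] * 5 + [True] * 5
print(downsample_padding_mask(sample_mask, 3))  # [False, False, True]
```

A group that mixes real and padded entries is treated as real, so partially filled steps are kept rather than masked out.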


    def preprocess(
        self,
        source: torch.Tensor,
        fbank_mean: float = 15.41663,
        fbank_std: float = 6.55582,
    ) -> torch.Tensor:
        fbanks = []
        for waveform in source:
            waveform = waveform.unsqueeze(0) * 2 ** 15
            fbank = ta_kaldi.fbank(waveform, num_mel_bins=128, sample_frequency=16000, frame_length=25, frame_shift=10)
            fbanks.append(fbank)
        fbank = torch.stack(fbanks, dim=0)
        fbank = (fbank - fbank_mean) / (2 * fbank_std)
        return fbank
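The arithmetic in `preprocess` can be checked by hand: the waveform is scaled by `2 ** 15`, filterbank frames are taken with a 25 ms window every 10 ms at 16 kHz, and the result is normalized as `(fbank - mean) / (2 * std)`. The sketch below works through these numbers in plain Python. The helper names are hypothetical, and the frame-count formula assumes Kaldi-style framing without edge padding (torchaudio's default `snip_edges=True`), which is an assumption about the library default rather than something stated in this file.

```python
FBANK_MEAN = 15.41663   # default fbank_mean in preprocess
FBANK_STD = 6.55582     # default fbank_std in preprocess


def scale_waveform_sample(x: float) -> float:
    """Map a float sample in [-1, 1] to the 16-bit integer range, as preprocess does."""
    return x * 2 ** 15


def num_fbank_frames(num_samples: int, sample_rate: int = 16000,
                     frame_length_ms: int = 25, frame_shift_ms: int = 10) -> int:
    """Frame count assuming no padding at the edges (snip_edges=True)."""
    window = sample_rate * frame_length_ms // 1000   # 400 samples
    shift = sample_rate * frame_shift_ms // 1000     # 160 samples
    if num_samples < window:
        return 0
    return 1 + (num_samples - window) // shift


def normalize_fbank(value: float, mean: float = FBANK_MEAN, std: float = FBANK_STD) -> float:
    """Same normalization as preprocess: subtract the mean, divide by twice the std."""
    return (value - mean) / (2 * std)


print(scale_waveform_sample(0.5))    # 16384.0
print(num_fbank_frames(16000))       # frames in one second of 16 kHz audio
print(normalize_fbank(FBANK_MEAN))   # 0.0
```

Dividing by `2 * std` instead of `std` means a value one standard deviation above the mean maps to 0.5 rather than 1.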


    def extract_features(
        self,
        source: torch.Tensor,
        padding_mask: Optional[torch.Tensor] = None,
        fbank_mean: float = 15.41663,
        fbank_std: float = 6.55582,
        feature_only=False,
    ):
        fbank = self.preprocess(source, fbank_mean=fbank_mean, fbank_std=fbank_std).to(torch.float32)

        if padding_mask is not None:
            padding_mask = self.forward_padding_mask(fbank, padding_mask)

        fbank = fbank.unsqueeze(1)
        features = self.patch_embedding(fbank)
        features = features.reshape(features.shape[0], features.shape[1], -1)
        features = features.transpose(1, 2)
        features = self.layer_norm(features)

        if padding_mask is not None:
            padding_mask = self.forward_padding_mask(features, padding_mask)

        if self.post_extract_proj is not None:
            features = self.post_extract_proj(features)

        x = self.dropout_input(features)

        x, layer_results = self.encoder(
            x,
            padding_mask=padding_mask,
        )

        if not feature_only and self.predictor is not None:
            x = self.predictor_dropout(x)
            logits = self.predictor(x)

            if padding_mask is not None and padding_mask.any():
                logits[padding_mask] = 0
                logits = logits.sum(dim=1)
                logits = logits / (~padding_mask).sum(dim=1).unsqueeze(-1).expand_as(logits)
            else:
                logits = logits.mean(dim=1)

            lprobs = torch.sigmoid(logits)

            return lprobs, padding_mask
        else:
            return x, padding_mask
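In the fine-tuned branch of `extract_features`, per-step logits are averaged over the non-padded steps only (padded logits are zeroed, summed, and divided by the count of valid steps) before a sigmoid is applied. The value named `lprobs` is therefore a vector of per-class sigmoid probabilities, not log-probabilities. The pure-Python sketch below reproduces that pooling for one sequence; `masked_mean_sigmoid` is a hypothetical name and the input values are made up.

```python
import math
from typing import List


def masked_mean_sigmoid(logits: List[List[float]], padding_mask: List[bool]) -> List[float]:
    """Average logits over non-padded steps, then apply a sigmoid per class.

    logits is indexed as [step][class]; padding_mask[step] is True for padding.
    Assumes at least one step is not padding.
    """
    num_classes = len(logits[0])
    valid_steps = [t for t, is_pad in enumerate(padding_mask) if not is_pad]
    pooled = [
        sum(logits[t][c] for t in valid_steps) / len(valid_steps)
        for c in range(num_classes)
    ]
    return [1.0 / (1.0 + math.exp(-value)) for value in pooled]


# Two valid steps and one padded step whose large logits must be ignored.
example_logits = [[0.0, 2.0], [0.0, -2.0], [100.0, 100.0]]
example_mask = [False, False, True]
print(masked_mean_sigmoid(example_logits, example_mask))  # [0.5, 0.5]
```

Since each class gets an independent sigmoid, the outputs need not sum to 1, which fits a multi-label setup such as the 527-class predictor configured in `BEATsConfig`.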

|

beats/LICENSE_beats

ADDED

|

@@ -0,0 +1,21 @@


The MIT License (MIT)

Copyright (c) Microsoft Corporation

Permission is hereby granted, free of charge, to any person obtaining a copy
of this software and associated documentation files (the "Software"), to deal
in the Software without restriction, including without limitation the rights
to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
copies of the Software, and to permit persons to whom the Software is
furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all
copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
SOFTWARE.

|

beats/Tokenizers.py

ADDED

|

@@ -0,0 +1,172 @@


# --------------------------------------------------------
# BEATs: Audio Pre-Training with Acoustic Tokenizers (https://arxiv.org/abs/2212.09058)
# Github source: https://github.com/microsoft/unilm/tree/master/beats
# Copyright (c) 2022 Microsoft
# Licensed under The MIT License [see LICENSE for details]
# Based on fairseq code bases
# https://github.com/pytorch/fairseq
# --------------------------------------------------------


import torch
import torch.nn as nn
from torch.nn import LayerNorm
import torchaudio.compliance.kaldi as ta_kaldi

from beats.backbone import (
    TransformerEncoder,
)
from beats.quantizer import (
    NormEMAVectorQuantizer,
)

import logging
from typing import Optional

logger = logging.getLogger(__name__)


class TokenizersConfig:
    def __init__(self, cfg=None):
        self.input_patch_size: int = -1  # patch size of patch embedding
        self.embed_dim: int = 512  # patch embedding dimension
        self.conv_bias: bool = False  # include bias in conv encoder

        self.encoder_layers: int = 12  # num encoder layers in the transformer
        self.encoder_embed_dim: int = 768  # encoder embedding dimension
        self.encoder_ffn_embed_dim: int = 3072  # encoder embedding dimension for FFN
        self.encoder_attention_heads: int = 12  # num encoder attention heads
        self.activation_fn: str = "gelu"  # activation function to use

        self.layer_norm_first: bool = False  # apply layernorm first in the transformer
        self.deep_norm: bool = False  # apply deep_norm first in the transformer

        # dropouts
        self.dropout: float = 0.1  # dropout probability for the transformer
        self.attention_dropout: float = 0.1  # dropout probability for attention weights
        self.activation_dropout: float = 0.0  # dropout probability after activation in FFN
        self.encoder_layerdrop: float = 0.0  # probability of dropping a transformer layer
        self.dropout_input: float = 0.0  # dropout to apply to the input (after feat extr)

        # positional embeddings
        self.conv_pos: int = 128  # number of filters for convolutional positional embeddings
        self.conv_pos_groups: int = 16  # number of groups for convolutional positional embedding

        # relative position embedding
        self.relative_position_embedding: bool = False  # apply relative position embedding
        self.num_buckets: int = 320  # number of buckets for relative position embedding
        self.max_distance: int = 1280  # maximum distance for relative position embedding
        self.gru_rel_pos: bool = False  # apply gated relative position embedding

        # quantizer
        self.quant_n: int = 1024  # codebook number in quantizer
        self.quant_dim: int = 256  # codebook dimension in quantizer

        if cfg is not None:
            self.update(cfg)

    def update(self, cfg: dict):
        self.__dict__.update(cfg)



class Tokenizers(nn.Module):
    def __init__(
        self,
        cfg: TokenizersConfig,
    ) -> None:
        super().__init__()
        logger.info(f"Tokenizers Config: {cfg.__dict__}")

        self.cfg = cfg

        self.embed = cfg.embed_dim
        self.post_extract_proj = (

|