Kreyòl-MT

Welcome to the repository for our from-scratch public-data model.

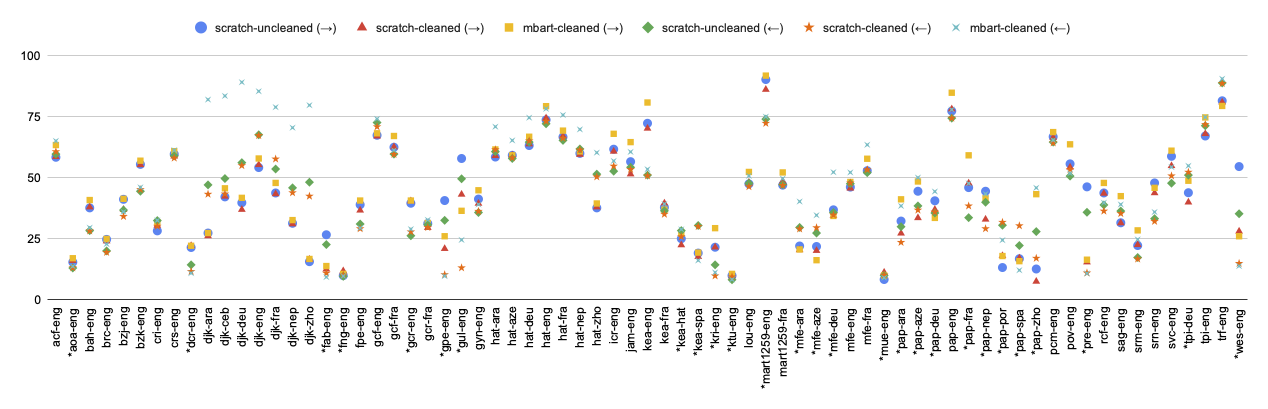

Please see our paper: 📄 "Kreyòl-MT: Building Machine Translation for Latin American, Caribbean, and Colonial African Creole Languages"

And our GitHub repository: 💻 Kreyòl-MT

And cite our work:

@article{robinson2024krey,

title={Krey{\`o}l-MT: Building MT for Latin American, Caribbean and Colonial African Creole Languages},

author={Robinson, Nathaniel R and Dabre, Raj and Shurtz, Ammon and Dent, Rasul and Onesi, Onenamiyi and Monroc, Claire Bizon and Grobol, Lo{\"\i}c and Muhammad, Hasan and Garg, Ashi and Etori, Naome A and others},

journal={arXiv preprint arXiv:2405.05376},

year={2024}

}

Model hosted here

This is a many-to-many model for translation into and out of Creole languages, trained from scratch on public data.

from transformers import MBartForConditionalGeneration, AutoModelForSeq2SeqLM

from transformers import AlbertTokenizer, AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("jhu-clsp/kreyol-mt-scratch-pubtrain", do_lower_case=False, use_fast=False, keep_accents=True)

# The tokenizer is based on the AlbertTokenizer class, which is essentially a SentencePiece wrapper. We trained this SentencePiece model from scratch.

# Or use tokenizer = AlbertTokenizer.from_pretrained("jhu-clsp/kreyol-mt-scratch-pubtrain", do_lower_case=False, use_fast=False, keep_accents=True)

model = AutoModelForSeq2SeqLM.from_pretrained("jhu-clsp/kreyol-mt-scratch-pubtrain")

# Or use model = MBartForConditionalGeneration.from_pretrained("jhu-clsp/kreyol-mt-scratch-pubtrain")

# Look up the IDs of the special tokens (convert_tokens_to_ids is the public equivalent of _convert_token_to_id_with_added_voc)

bos_id = tokenizer.convert_tokens_to_ids("<s>")

eos_id = tokenizer.convert_tokens_to_ids("</s>")

pad_id = tokenizer.convert_tokens_to_ids("<pad>")

# First, tokenize the input. The model was trained on inputs of the form "Sentence </s> <2xxx>" and outputs of the form "<2yyy> Sentence </s>", where xxx and yyy stand for the source and target language codes.

# Example: Saint Lucian Patois to English translation uses the language indicator tags <2acf> and <2eng>, where acf represents Saint Lucian Patois and eng represents English. The code below translates from Jamaican Patois (<2jam>) to English (<2eng>).

# The following language indicator tokens are usable: <2acf>, <2aoa>, <2ara>, <2aze>, <2bah>, <2brc>, <2bzj>, <2bzk>, <2cab>, <2ceb>, <2cri>, <2crs>, <2dcr>, <2deu>, <2djk>, <2eng>, <2fab>, <2fng>, <2fpe>, <2fra>, <2gcf>, <2gcr>, <2gpe>, <2gul>, <2gyn>, <2hat>, <2icr>, <2jam>, <2kea>, <2kri>, <2ktu>, <2lou>, <2mart1259>, <2mfe>, <2mue>, <2nep>, <2pap>, <2pcm>, <2por>, <2pov>, <2pre>, <2rcf>, <2sag>, <2spa>, <2srm>, <2srn>, <2svc>, <2tpi>, <2trf>, <2wes>, <2zho>

# For the language each code corresponds to, see: https://github.com/JHU-CLSP/Kreyol-MT?tab=readme-ov-file#building-machine-translation-for-latin-american-caribbean-and-colonial-african-creole-languages

inp = tokenizer('Mi tingk se yu de tel mi lai. </s> <2jam>', add_special_tokens=False, return_tensors="pt", padding=True).input_ids

model.eval() # Set dropouts to zero

# Generate with beam search; the decoder starts from the target language tag.
model_output = model.generate(
    inp,
    use_cache=True,
    num_beams=4,
    max_length=60,
    min_length=1,
    early_stopping=True,
    pad_token_id=pad_id,
    bos_token_id=bos_id,
    eos_token_id=eos_id,
    decoder_start_token_id=tokenizer.convert_tokens_to_ids("<2eng>"),
)

decoded_output=tokenizer.decode(model_output[0], skip_special_tokens=True, clean_up_tokenization_spaces=False)

print(decoded_output)
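The input format and language tags described in the comments above can be wrapped in a few small helpers. This is a minimal sketch, not part of the released code: the names `SUPPORTED_LANGS`, `lang_tag`, `format_source`, and `translate` are hypothetical, and `translate` assumes a tokenizer and model loaded as in the example above.

```python
# Language codes copied from the list of usable "<2xxx>" indicator tokens above.
SUPPORTED_LANGS = {
    "acf", "aoa", "ara", "aze", "bah", "brc", "bzj", "bzk", "cab", "ceb",
    "cri", "crs", "dcr", "deu", "djk", "eng", "fab", "fng", "fpe", "fra",
    "gcf", "gcr", "gpe", "gul", "gyn", "hat", "icr", "jam", "kea", "kri",
    "ktu", "lou", "mart1259", "mfe", "mue", "nep", "pap", "pcm", "por",
    "pov", "pre", "rcf", "sag", "spa", "srm", "srn", "svc", "tpi", "trf",
    "wes", "zho",
}


def lang_tag(code):
    """Return the indicator token for a language code, e.g. 'eng' -> '<2eng>'."""
    if code not in SUPPORTED_LANGS:
        raise ValueError(f"Unsupported language code: {code!r}")
    return f"<2{code}>"


def format_source(sentence, src_lang):
    """Build the encoder input in the training format: 'Sentence </s> <2src>'."""
    return f"{sentence.strip()} </s> {lang_tag(src_lang)}"


def translate(sentence, src_lang, tgt_lang, tokenizer, model, max_length=60):
    """Translate one sentence (hypothetical wrapper around the steps shown above)."""
    inp = tokenizer(
        format_source(sentence, src_lang),
        add_special_tokens=False,  # the tags and </s> are already in the string
        return_tensors="pt",
        padding=True,
    ).input_ids
    output = model.generate(
        inp,
        use_cache=True,
        num_beams=4,
        max_length=max_length,
        early_stopping=True,
        pad_token_id=tokenizer.convert_tokens_to_ids("<pad>"),
        bos_token_id=tokenizer.convert_tokens_to_ids("<s>"),
        eos_token_id=tokenizer.convert_tokens_to_ids("</s>"),
        # Decoding starts from the target language tag.
        decoder_start_token_id=tokenizer.convert_tokens_to_ids(lang_tag(tgt_lang)),
    )
    return tokenizer.decode(
        output[0], skip_special_tokens=True, clean_up_tokenization_spaces=False
    )
```

With the tokenizer and model loaded as above, `translate("Mi tingk se yu de tel mi lai.", "jam", "eng", tokenizer, model)` would run the same Jamaican Patois to English example. Validating the code up front is a design choice: a tag outside the listed set would otherwise be tokenized as ordinary text rather than as a language indicator.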
